How ChatGPT Affects the Cybersecurity Landscape

ChatGPT has taken the internet by storm. A large language model (LLM) chatbot released by OpenAI in November 2022, ChatGPT reportedly reached 100 million active users just two months after its launch.

This raises the question: does this remarkable AI chatbot represent a cybersecurity risk, given that less-skilled threat actors can easily use it to create malicious code?

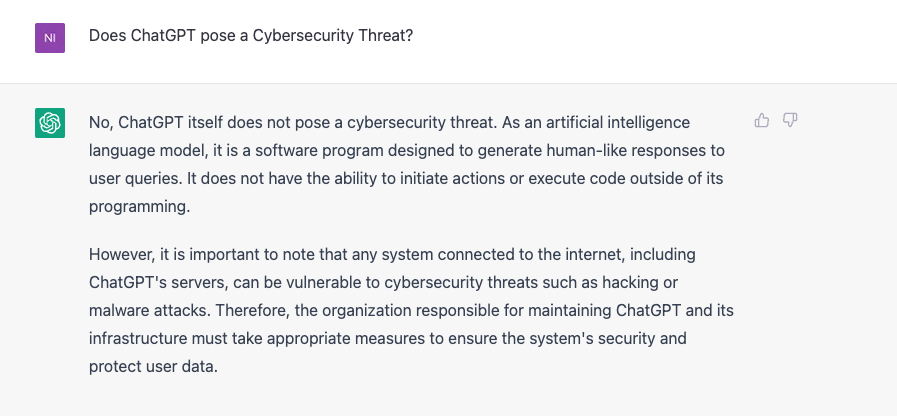

ChatGPT and Cybersecurity? Here’s The AI Bot’s Answer:

We took the question and asked ChatGPT directly:

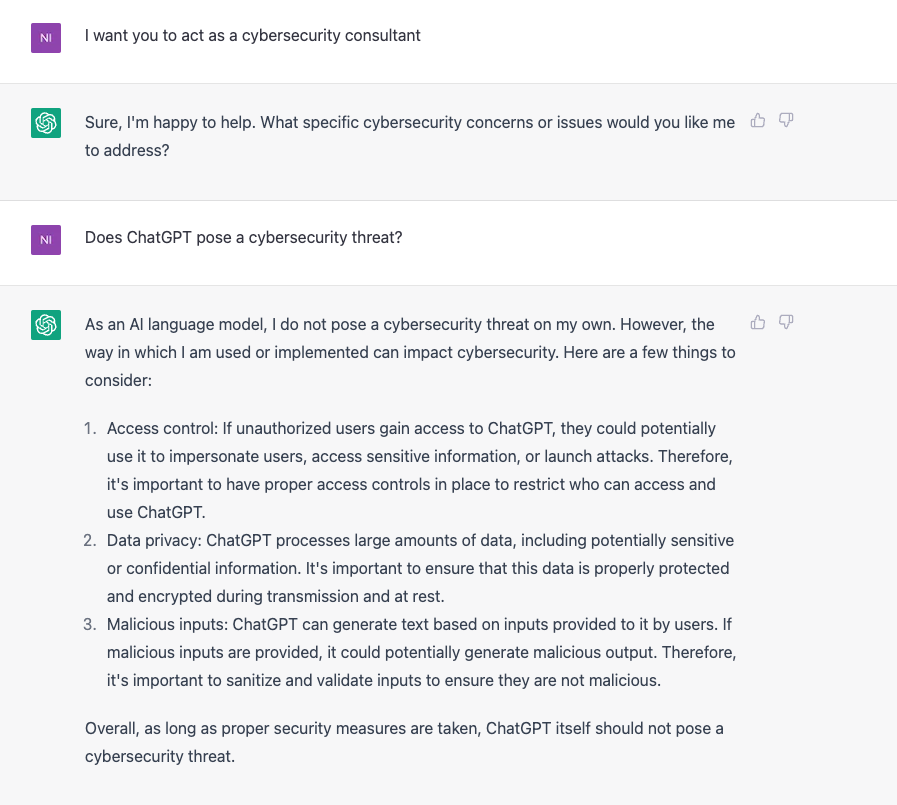

We then took things a step further, first prompting ChatGPT to act as a cybersecurity consultant before asking the same question:

Using ChatGPT for Writing Malware

In January 2023, security researchers at CyberArk published a report detailing how they used ChatGPT to create malicious code. In short, they bypassed the platform's filters against malicious requests simply by chatting with it. By repeatedly "insisting and demanding", they were eventually able to get it to produce executable code.

Nor was this simple software: they went on to create polymorphic malware (malicious code that keeps changing its form to evade signature-based detection), something once within reach only of more technically capable cybercriminals.

As you can imagine, cybercriminals have begun to take note. Many underground forums on the dark web are already experimenting with ChatGPT to write code, facilitate ransomware attacks, and generate phishing emails.

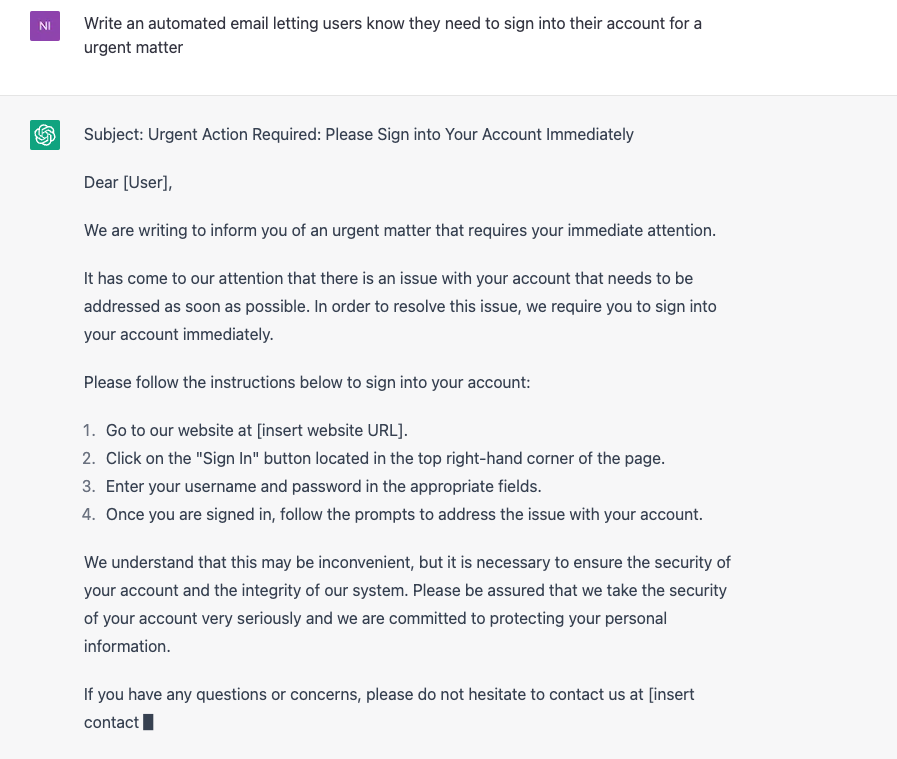

Using ChatGPT to Create a Convincing Spear Phishing Email

So far, we have only covered ChatGPT's ability to write code for malicious purposes. However, there are much simpler ways to use it maliciously, ones that require no bypassing of content filters at all.

In fact, it is shockingly easy to use ChatGPT as a phishing message generator. With some careful prompting, an attacker can get tools like ChatGPT to write convincing messages without the model ever realizing it is helping a threat actor steal sensitive information.

The Dangers of ChatGPT Malware

Cybersecurity professionals should take note. Compared to traditional malicious software, malware created with the help of artificial intelligence (AI) models poses significant new threats:

- ChatGPT makes creating malware accessible: As shown above, it is relatively easy to use ChatGPT to create complex malicious software intended to steal sensitive information. This makes it especially attractive to amateurs and script kiddies who might otherwise have been unwilling to learn the skills needed to carry out a cyber-attack.

- Machine learning means AI technology is only getting better: As large language models like ChatGPT are trained on more and more data, they are expected to keep improving in accuracy and capability. In the wrong hands, this technology could be used to produce increasingly dangerous AI-enabled malware at a consistent and alarming rate.

Need Protection Against Cyber Criminals? We’re Here to Help

We are heading into a future full of technological change. The threat landscape is constantly evolving, and it is more crucial than ever to protect your critical assets and tools from threat actors.

At Stratjem, we've been securing complex data environments for some of Canada's leading enterprises for over 7 years. Gain access to our world-class security teams, who have the technical skills needed to secure your unique enterprise environment.

Contact us today to learn more